Great read! Any news on the dev front that you can share with us?

Funny to see old names from back in 2014 that are still around.

It's nice to read about the internal workings from the developers. Personally, I’d rather be patient and wait for fully tested software than get something that is released to the public full of bugs, which isn’t good for reputation or investors.

I’m in it for the long term, and can wait a few years if the end product is optimized and superior. I can cash out now and be a millionaire, or I can be patient, wait a few years and be a multi-millionaire and/or live off the transaction fees. By that time the Devs would be billionaires I hope as a result of their commitment and hard work, and they can enjoy the fruits of their labor.

@gimre regarding websocket support: is the goal of catapult to support both http/2 and websockets, or http/1.x + websockets?

Also, a couple of side questions:

sorry for the late answer:

right now it’s http/1 + ws, but due to the more modular architecture of catapult, supporting http/2 shouldn’t be a big problem.

we’re trying our best, but it’s hard to judge; it’s possible we’ll share the docs for the APIs earlier than catapult itself

there are two things: main p2p network storage - you probably won’t be able to change this.

api layer storage - that is pluggable, and if all goes well, you should be able to replace it, but you’ll have to write the appropriate plugin on your own.

thanks for the follow-up,

a couple of ideas for you to think about as things progress:

actually it’ll be in reverse order: first we’ll have the normal api, then we’ll add a backward-compatibility layer.

gRPC - probably won’t be an option, because the p2p layer uses a custom binary format, but there’s catapult-sdk, which you’ll be able to use to talk to catapult p2p servers directly if you need it

On gRPC, I was referring more to an alternative or addition to the REST interface/layer. Its built-in communication/streaming capabilities are great, and so is its built-in support for generating bindings in many languages for 3rd party product integrations, versus writing REST SDKs from scratch in each language.

Hi,

Have you considered using Akka (http://akka.io) for the catapult WebSocket support? It also comes with messaging based on the Actor model, so you get a highly concurrent, scalable, event-driven architecture, built on reactive streams, in a single package.

What was the reason to choose ZMQ over such a technology?

Best regards,

Christian

Catapult is written in c++ (server) and javascript (rest).

Akka, on the other hand, runs on the JVM (it is written in Scala, with a Java API), so it doesn’t fit in there.

Thanks for your response. I thought the REST API was written in Java. So does this mean that the new catapult REST API is based on node? If so, how do you make use of multiple threads for concurrency in node? I haven’t used node for server applications, though I know there are options to bypass the single-thread limitation.

This also brings me to the question of why a dynamically typed, single-threaded, node-based solution was chosen for the API part instead of Akka or a similar technology.

Sorry if this sounds critical. That is not my intention. I’m only interested in the criteria by which technologies are chosen for the NEM platform.

Best regards,

Christian

The REST API is indeed based on node. All db calls are async (and mongodb is multi-threaded), so they should not be a bottleneck.

Node is widely used in many projects. It looked like a good approach to us.
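To illustrate the point about async db calls, here is a minimal sketch, not the actual catapult REST code. `fakeDbQuery` and `handleRequests` are hypothetical names, and a timer stands in for a real MongoDB query. It only shows that a single node thread can have several queries in flight at once, so the total wait is close to one query's latency rather than the sum.

```javascript
// Hypothetical stand-in for an async database call (e.g. a MongoDB query).
// The timer simulates I/O latency; no JavaScript runs while it is pending,
// so the single node thread stays free to start other work.
function fakeDbQuery(id, latencyMs = 50) {
  return new Promise((resolve) => {
    setTimeout(() => resolve({ id, found: true }), latencyMs);
  });
}

// Start every query immediately, then wait for all of them together.
async function handleRequests(ids) {
  const started = Date.now();
  const results = await Promise.all(ids.map((id) => fakeDbQuery(id)));
  return { results, elapsedMs: Date.now() - started };
}

// Example usage: five simulated queries issued concurrently.
handleRequests([1, 2, 3, 4, 5]).then(({ results, elapsedMs }) => {
  console.log(`${results.length} queries finished in about ${elapsedMs} ms`);
});
```

In this sketch the five simulated queries overlap instead of running back to back. CPU-heavy work would still block the single thread, which is a separate concern from waiting on the database.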

That’s interesting. Didn’t see that coming.

I always thought about looking at node but never really saw a reason to switch to it. Then there also were the forks and the left-pad incident which really turned me off to it.

I trust you guys though

plain javascript? no strongly typed language compiled to javascript?

Devs have a track record that speaks volumes, but I must say having multiple components in different languages, nodejs which is a nightmare to administer, plain javascript which doesn’t help for maintenance of large code bases, mongodb (dated article, but not reassuring: http://hackingdistributed.com/2014/04/06/another-one-bites-the-dust-flexcoin/) and a full rewrite wrapped in secrecy makes it look really scary as it is usually a recipe for disaster.

Let’s hope my fears will be laughed at when Catapult launches

that’s why you put things in docker containers

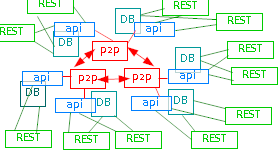

rest layer is a tiny piece. the real thing is the p2p/api servers behind.

in catapult mongo is not used as a storage for p2p network.

also: always make sure to check your facts:

a) I don’t think it was ever mentioned that flexcoin (and poloniex for that matter) was using mongo

b) The author of the article is involved in the key-value solution hyperdex.org (it’s up to you to figure out the motives)

c) (some additional reading: http://blog-shaner.rhcloud.com/the-real-bitcoin-nosql-failure/)

Rather than linking the article, which is an easy target to shoot down for the reasons you mention, I should have simply mentioned that the mongodb documentation still states today that MongoDB does not support multi-document transactions.

The fears I expressed are due to:

As I said, I hope you guys prove me completely wrong when Catapult is released.

> today MongoDB does not support multi-document transactions

Yes that is a known issue with MongoDB, but it is not something that is required. MongoDB is merely storing supplemental data and was chosen for high query performance to satisfy high TPS scenarios. Blocks - the “ground truth” of any blockchain - and certain calculated data are stored on disk in a different mechanism. In fact, backbone nodes have no requirement for MongoDB at all. MongoDB is only used to serve data to API clients.

> a complete rewrite, with a risk of over engineering, leading to a solution complex and difficult to maintain

That is a risk of any large engineering effort, but I think we have things mostly under control. There has been a concerted effort to build everything with pluggability in mind in order to facilitate future development.

Could you help settle a debate I am having with a fellow NEM true believer? Will migrating the NEM blockchain to catapult require a hardfork?

Thanks @Jaguar0625 for chiming in. Happy to hear you consider things to be mostly under control.

I’m looking forward to seeing the result of the team’s hard work published as open source. That will be a huge milestone and should ease lots of fears. If it could bring more contributions to the development of NEM it would be even better!

I am also a little bit nervous because of the complete rewrite :/

But I believe in you. You have been working on this project for such a long time, and you will do a great job!

I am probably one of the rare investors who are not impatient for the Catapult release, good things need time!

So don’t listen to the impatient people, take your time and keep up the great work!

updated OP