How will the 500 nodes in the test network differ (configuration, OS, RAM, installation from scratch / bootstrap, and so on)?

Mmhh … Participation in the test network was not that high until now. If I saw that correctly.

Thx !

Give me a day or two to come back on this one. I need to have a chat with the test team but have a few things to catch up on after the testnet release work over the past week or so.

Very valid questions, I’ll try and come back asap

Hi @garm more information for you below, hope this helps:

From Public Slack over the weekend:

Link: https://nem2.slack.com/archives/CF1KY4EJJ/p1608528207010100

Wayon Blair 5:23 AM

Hi All,

We have kicked off another round of testing in testnet.

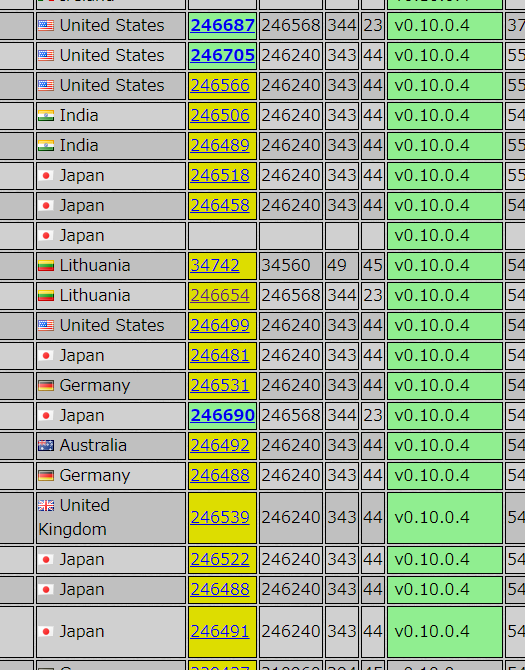

@RavitejAluru has scaled testnet nodes to over 500 nodes. This was done in a couple of batches, the first set syncing around 300 nodes at once. There are currently over 300 voting nodes - http://api-01.us-west-1.0.10.0.x.symboldev.network:3000/finalization/proof/epoch/340

We kicked off our first spamming testing. You will notice a lot of transfer transactions for the next day or so.

We have more testing planned for the week including pushing testnet to its limits.

You might notice delays in your transactions getting confirmed.

In terms of the nodes being added, the target is below, in addition to the existing ~100 nodes from community:

- Dual Nodes (API+Harvesting+Voting): 40 - 50 per AWS region

- Peer Nodes: 10 - 20 per AWS region

- AWS regions: 7

ap-northeast-1, ap-southeast-1, eu-central-1, eu-west-1, us-east-1, us-west-1, us-west-2
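As a quick sanity check on the targets above, the per-region figures can be multiplied out. This is only arithmetic on the numbers quoted in the message; the community count is the approximate "~100" and the function name is made up for illustration.

```python
# Targets quoted above; the community figure is the approximate "~100".
AWS_REGIONS = 7
DUAL_PER_REGION = (40, 50)   # (low, high) dual nodes per region
PEER_PER_REGION = (10, 20)   # (low, high) peer nodes per region
COMMUNITY_NODES = 100        # approximate, existing community nodes


def total_node_range(regions, dual, peer, community):
    """Return (low, high) total node counts for the stated targets."""
    low = regions * (dual[0] + peer[0]) + community
    high = regions * (dual[1] + peer[1]) + community
    return low, high


low, high = total_node_range(
    AWS_REGIONS, DUAL_PER_REGION, PEER_PER_REGION, COMMUNITY_NODES
)
print(low, high)  # 450 590
```

So the stated targets work out to roughly 450 to 590 nodes in total, which is consistent with the "over 500 nodes" figure in the message.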

Hardware configuration

- Dual Nodes: t3.xlarge, 4 x vCPU, 16GB

- Peer Nodes: t3.large, 2 x vCPU, 8GB

Testing Approach:

- Load injection tool sends txs at 100 tps; the network is monitored for failures, errors, slowness etc. for the next few days
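For readers wondering what "sending at 100 tps" looks like in practice, here is a minimal sketch of a fixed-rate sender. It is not the test team's tool (which is not described in the thread); `inject_load` and the `send` callback are hypothetical names, and the actual transaction announce call is deliberately left out.

```python
import time


def inject_load(send, tps, duration_s, clock=time.monotonic, sleep=time.sleep):
    """Call send(i) at a steady rate of `tps` for `duration_s` seconds.

    Each send is scheduled relative to the start time rather than the
    previous send, so one slow send does not permanently lower the rate.
    Returns the number of sends attempted.
    """
    interval = 1.0 / tps
    total = round(tps * duration_s)
    start = clock()
    for i in range(total):
        due = start + i * interval
        delay = due - clock()
        if delay > 0:
            sleep(delay)
        send(i)  # hypothetical: announce one transfer transaction here
    return total


# Short demo with a no-op sender standing in for a real announce call.
attempted = inject_load(lambda i: None, tps=100, duration_s=0.1)
```

The `clock` and `sleep` parameters are only there so the pacing logic can be exercised without real waiting.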

Currently experiencing delays in synchronizing a number of nodes.

Is there a problem?

and I have a question.

What is the acceptable level of delay in synchronization of nodes?

Question

Today 12/22

Many of the test nodes stopped and block generation stopped for a while. What kind of experiment was this?

Thanks both. Just confirming what I said on Slack: there looks to have been an issue from some of the testing, and the test team are working through what the cause is. I will come back as soon as possible on this one, but it will take some time to work through.

@dusanjp it was a load test, designed to try and put a large load on the network

The test team have completed initial investigations, see below for the findings:

Summary

The good news is we have tested up to 100tps and the Testnet handled it with no problems.

The less good news is that at 130tps we found what looks like a MongoDB configuration issue; this is being validated but it looks almost certain at this stage to be the cause.

That issue caused the affected nodes to fail and finality to stop because they were voting nodes.

What happened

- Over the weekend we ran a test up to 100 tps (Transfer Transactions) and it passed with no problems.

- Last night (UTC) we started a test at 130 tps (Transfer Transactions) and it caused some memory issues on MongoDB.

- Those issues caused the NGL nodes to start failing as MongoDB gradually used more and more memory. Because those nodes were voting nodes, finality stalled; however, block production is still progressing.

- Separately, 6-7m transactions have been added over the past few days and as a result the chain is now about 40-45GB. A dual node will use more space than that again, so some nodes with smaller disks may also have run into problems.

Next Steps

The below is the plan from here:

1. Get Testnet back up and running normally. We need to unregister the failed nodes from the voting pools and let finality sort itself out, hopefully within the next 24 hours, possibly sooner. A message will be put out on the public Slack channel to confirm when complete.

2. There is a setting that can be used to throttle the memory usage on MongoDB, which we will test locally to ensure it meets the needs.

3. Assuming point 2 works, this will be rolled out across the NGL nodes, instructions will be given for community nodes (and a default added to bootstrap), and then we will re-run the test.
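The post does not name the MongoDB setting. One setting that does exist for this purpose is the WiredTiger cache size (`storage.wiredTiger.engineConfig.cacheSizeGB` in the config file, or `--wiredTigerCacheSizeGB` on the command line); whether that is the one the team has in mind is an assumption here. As background, the sketch below computes MongoDB's documented default for that cache, which is the larger of 50% of (RAM - 1 GB) and 256 MB, applied to the 16GB dual node size mentioned earlier in the thread.

```python
def default_wiredtiger_cache_gb(ram_gb):
    """MongoDB's documented default WiredTiger cache size, in GB.

    The default is the larger of 50% of (RAM - 1 GB) and 256 MB.
    """
    return max(0.5 * (ram_gb - 1), 0.25)


# 16 GB is the dual node (t3.xlarge) size quoted earlier in the thread.
print(default_wiredtiger_cache_gb(16))  # 7.5
```

Note that this cache is only part of what `mongod` uses; connections, in-memory sorts and the operating system file cache come on top, and on a dual node the server, broker and REST processes share the same RAM.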

We are planning the stress test festival at NEMTUS on December 27th. The test tool is also available. However, at the moment, the network is unstable, and even if we request test XYMs from the faucet, it is difficult to approve it. Will this be improved early?

When is the deadline for the testing period to be able to launch on schedule?

Is there a disconnect between NGLs and the perception of core developers?

There is no need to force a launch.

Why did most of the staff perform stress tests when they were on vacation in the first place?

Maybe it was because I was satisfied with the pretest at 100tps and made an easy estimate.

For Japanese people, taking a vacation in this situation is very annoying and questionable, but it can’t be helped for a healthy life.

Often the time estimates are really sweet. Even if the launch is delayed, there could even be a danger of delisting.

Yes, I caught Jaguar’s tweets in real time and sent him a mention.

The core developers are working hard.

Is there a disconnect between NGLs and the perception of core developers?

No, we are all working together as a single team to work through the issues

Why did most of the staff perform stress tests when they were on vacation in the first place?

Several stress tests have been performed in development environments over the past few months, they did not show issues, the first tests on Testnet also showed no issue up to 100tps. It was the last test(s) that was meant to go above 100tps that found the issue(s).

They may have been able to be performed earlier (as per Jag’s tweet) but could have been on the final code version and the Testnet needed to be recovered from the upgrade issue, so they still would have had to be retested with the bigger Testnet chain because it is the place that has a Mainnet-like network and chain.

Often the time estimates are really sweet

Sorry I don’t understand the term ‘sweet’ in this sentence, I saw it in some of the machine translations on twitter as well and didn’t understand those either. I will ask one of our team to interpret for me and reply if possible.

His word “sweet” means “loose” or “lax”.

and Sweet means thoughtless.

Are the specifications for Symbol’s tps performance still undecided?

Is it in the process of being tuned?

The below is written with the best information we have at this time, some of it may change as investigation continues.

I hope it helps with some of the conversations. The summary is that we all want the same answers (including the wider community, devs, me and the rest of the management team). It is not that information is known and is not being published; the investigation needs to complete to have the information to communicate, and that takes time. I know it is hard given the stage of the plan, time of year, length of time waited already etc., and I am sorry to have to ask for further patience; however, there is no other option than to ask for it while the investigation work completes.

In terms of availability: I will be mostly online all through the festive period and on most of the normal channels; with exception of the 25th of Dec when I will be with family. Feel free to ping me on whichever one suits you the best and I hope everyone who is celebrating has an enjoyable Christmas and New Year period

DaveH

Are the specifications for Symbol’s tps performance still undecided?

The specifications were to meet 100tps, which it passed.

Is it in the process of being tuned?

The 130tps test was to check how it would respond if 100tps was exceeded. It found two problems (copied from Jag’s tweet) some of which is node/network tuning but the tps target itself is not being tuned/changed.

1. MongoDB usage / configuration => causes large memory usage and crashes in broker and REST

This is possibly config/tuning/throttling but investigation is ongoing

2. Unconfirmed transactions => causes large memory usage in server

Is being looked into in the code to see how best to handle this situation, the existing approach catches most but not quite all of it. It may also be tuning/config but investigation is ongoing

A few other questions from telegram etc I will try and wrap up together, paraphrased:

Given the test at 100tps was successful can we just cap it at 100tps for now?

This is being looked at as an option. If it is done, nodes and the network must be able to handle and recover from numbers above that cap (see issue 2 above). It requires thought and consideration to ensure it can defend against a DoS-type issue if the tps were to spike. Testnet must also be running normally to be able to retest it (see below) if it is capped.

So yes it is an option, but to get to that option, the investigation needs to complete and is ongoing.
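To illustrate what a cap that still tolerates short spikes can look like in general, here is a minimal token-bucket sketch. This is a generic rate-limiting technique, not how Symbol throttles transactions (the thread does not say how a cap would be implemented); the class name and the `rate` and `burst` parameters are illustrative only.

```python
class TokenBucket:
    """Generic token-bucket limiter: `rate` tokens/second, up to `burst` stored."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate            # sustained admissions per second (the cap)
        self.burst = burst          # largest spike admitted in one go
        self.tokens = float(burst)  # start with a full bucket
        self.last = now

    def allow(self, now):
        """Return True if one item may be admitted at time `now` (seconds)."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical numbers: cap at 100/s, tolerate a spike of up to 10 at once.
bucket = TokenBucket(rate=100, burst=10)
spike = [bucket.allow(0.0) for _ in range(11)]
print(spike.count(True), spike.count(False))  # 10 1
```

Anything above the cap is rejected cheaply instead of being queued, which is the property that matters for the DoS concern: memory use stays bounded no matter how large the spike is.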

Why is Testnet not running normally?

To perform the final stress tests, Testnet had to scale to a Mainnet number of nodes - and the number of voting nodes had to scale. NGL scaled these as was planned, but that meant a super-majority were run by NGL as a result; not a normal Mainnet scenario.

Testing caused the NGL nodes to fail due to the issues above. This left the NGL nodes in a poor state (they did not fail gracefully). Those nodes now need to be rectified, which is being worked on in parallel; updates will be provided as progress occurs. The network is still generating blocks but not finalising; I synchronised a dual node overnight from block 0.

As the troubleshooting occurs, it is likely to place load/errors on other nodes so non-NGL nodes may experience unstable performance while the troubleshooting is occurring, which some people are seeing.

On Mainnet this ownership and hosting is decentralised and, rather than being a single test pool at NGL, a super-majority of distributed nodes would need to fail in a similar way for this to occur. By resolving the problems noted by Jag above, they would fail gracefully and recover. So the current Testnet behaviour is a direct result of having to scale Testnet centrally for testing.
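The difference between the two scenarios can be sketched with a toy model. Everything in it is an assumption made for illustration: every voting node is given equal weight, the two-thirds threshold is a placeholder rather than Symbol's actual finalization parameter, and the node counts and operator split are invented.

```python
from fractions import Fraction


def finality_can_progress(voting_weights, online, threshold=Fraction(2, 3)):
    """True if the online share of voting weight exceeds the threshold.

    `threshold` is a placeholder super-majority, not Symbol's real setting.
    """
    total = sum(voting_weights.values())
    live = sum(w for node, w in voting_weights.items() if node in online)
    return Fraction(live, total) > threshold


# Centralised test setup (invented numbers): one operator runs 300 of 350.
central = {f"ngl-{i}": 1 for i in range(300)}
central.update({f"community-{i}": 1 for i in range(50)})
only_community = {n for n in central if n.startswith("community-")}
print(finality_can_progress(central, only_community))  # False

# Decentralised setup (invented numbers): 35 operators with 10 nodes each.
spread = {f"op{o}-{i}": 1 for o in range(35) for i in range(10)}
one_operator_down = {n for n in spread if not n.startswith("op0-")}
print(finality_can_progress(spread, one_operator_down))  # True
```

In the first case a single operator's failure removes most of the voting weight and finality stalls; in the second, losing any one operator barely moves the online share.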

We will update as information is available on getting Testnet running normally.

Is launch going to be delayed?

This risk exists, has been known to exist and been communicated throughout this plan. Until the above investigation is complete it is not yet known if it is necessary. The decision to delay will not be taken lightly (obviously) and IF it needs to be taken, information is needed to be able to say for how long. That information is not available until the investigation completes.

Jag has taken the step of asking NemTus to postpone testing until investigation is more advanced for the same reason, thank you @h-gocchi for responding quickly and co-ordinating the change.

There is no intention to force a launch of something that is not ready, nor is there an intention to delay unless it is necessary - investigation by the devs needs to complete before this can be known either way. The teams are working actively (the majority are present during the Christmas period generally).

Estimations being Sweet/Lax/Loose/Thoughtless/Etc

Thanks for the clarifications above on the term @tresto @GodTanu

Estimations are made with the best knowledge that is present when they are made, they have been agreed and communicated jointly with the Core Developers, NGL Developers and NGL Exec team prior to any communication.

They have always been communicated as estimates with a level of risk. Risk decreases over time but cannot be removed entirely. As more information is known, they have been adjusted over time and as more information is known from this investigation they will be adjusted if necessary, or confirmed that it is not necessary.

Thank you, and congratulations on having the courage to communicate these details. This is the kind of transparency that is needed, and most wanted, in situations like this.

Thanks for your report.

I can understand if unforeseen problems arise and the launch date is postponed to resolve them. However, I think it was not a good idea to announce the number of snapshot blocks, etc. when the outlook was not yet definite. This is because the postponement announcement (or anxiety about postponement) will cause a lot of people (but that’s only for the information poor) to lose money in the market. I think you should take this into consideration a little more.